TQ Level Up

What makes up the live EVE cluster, and how it is all connected together, is something of a mystery to the many who have speculated about it.

As you may know, the Tranquility (TQ) cluster will be down for maintenance on Wednesday, June 23, 2010, from 0900 to 1500 UTC.

With a migration and a bit of a redesign on the way, I thought it was about time to deliver the facts on what it is and what it will be.

Step one: A cozy new home

TQ has morphed and adjusted over the years as much as EVE Online has. It's gotten to the point where a couple of cabinets simply can't handle it anymore. So, the first step is to move TQ to a bigger place. We'll still be in the same datacenter and connecting to you from multiple networks to ensure the best performance, but this time with a lot more space, more power, and room to grow.

The new space is a whopping 79kW of power across 12 cabinets. With the larger space and added power, we can now aggregate TQ, Singularity, and the ancillary EVE Services (web, forums, account management, etc.) into a single location in the datacenter. This will provide better network connectivity, fewer intermediary devices and increased capacity.
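For a rough sense of what that means per cabinet, here is a minimal back-of-the-envelope sketch. It uses only the two figures above and assumes the power is spread evenly across the cabinets, which the real layout may well not do.

```python
# Figures quoted above: 79 kW of power across 12 cabinets.
TOTAL_POWER_KW = 79
CABINETS = 12

# Assumption (not from the post): power is divided evenly between cabinets.
avg_kw_per_cabinet = TOTAL_POWER_KW / CABINETS

print(f"Average power budget: {avg_kw_per_cabinet:.1f} kW per cabinet")
```

Whatever the actual per-cabinet split is, essentially all of that power ends up as heat that has to be removed.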

As with any dense computing solution like our blade servers, heat is always a major concern. Sure, we get great management tools and reduced physical space requirements, but we still have to cool the servers. To do this we've moved from an ambient cooled system (basically, the open room temperature is managed but the cold air is not funneled directly to the server intakes) to a completely self-contained, closed-aisle cooling system. Cold air from the center of the aisle is force-fed into the cabinets, which significantly reduces the amount of wasted cool air and focuses it where it's needed most. This takes the industry-standard "hot aisle/cold aisle" design a step further without having to do anything crazy like running servers under nitrogen pools (although that is pretty cool).

Step two: Networking to 9000

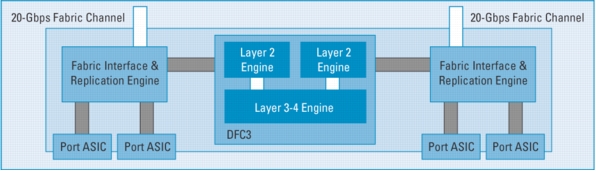

Most of the traffic on the network in TQ happens between the servers on the internal network. While the routers we use are quite powerful (Cisco 7600s with the RSP720 route processors), our internal switching needed a kick in the pants. With the move we are going to be adding about 800% capacity to our side-to-side network, along with some really nice Cisco Distributed Forwarding Cards (DFC3) on the network blades themselves to help reduce latency and ease the burden on the supervisor cards that run the switches.

Step three: Pics or it didn't happen

We are going to continue sharing information about the infrastructure that makes EVE work in the next installment. Although not everyone gets excited about cabinets and a datacenter, there are a few that do. I personally keep them posted on my wall at home. This is meant to be the first of many installments as we continue to improve the infrastructure that EVE runs on.

Step four: But, how does this help me get my ship back?

The increase in Layer 2 switching capacity, the reduction in latency through Distributed Forwarding, and the extra cold hamsters will all help reduce overall latency in EVE. It is not a single solution, but a good foundation on which core infrastructure can be eliminated as a possible concern.

The next tech installment will have more details on Remapping EVE, the next level of Fleet Fighting and better prediction of hot spots for dedicated nodes.

TQ Tech Details: (Not the whole system, just what runs TQ)

Servers

- 64 x IBM HS21

  - 2 x dual-core 3.33GHz CPUs

  - 32GB of RAM each

  - 1 x 72GB HDD each

- 2 x IBM X3850 M2

  - 2 x six-core 2.66GHz CPUs

  - 128GB of RAM

  - 4 x 146GB HDD

Cores

- 280 total Cores

- ~1 THz

RAM

- 2.3TB of Total RAM

Storage

- 4.8TB of Local Storage

- 2TB of SSD SAN

- 256GB of RAM SAN

Network

- Gigabit Ethernet

- 4Gb/s Fibre Channel
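The core, clock, and RAM totals in the list above can be cross-checked from the per-server figures. This is just a quick sketch: the `ServerModel` class and helper names are made up for illustration, and it assumes the 128GB of RAM listed for the X3850 M2 is per server.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ServerModel:
    """One line of the spec sheet (illustrative structure, not a real inventory)."""
    name: str
    count: int
    sockets: int
    cores_per_socket: int
    clock_ghz: float
    ram_gb: int  # assumed to be per server


FLEET = [
    ServerModel("IBM HS21", count=64, sockets=2, cores_per_socket=2,
                clock_ghz=3.33, ram_gb=32),
    ServerModel("IBM X3850 M2", count=2, sockets=2, cores_per_socket=6,
                clock_ghz=2.66, ram_gb=128),
]


def total_cores(fleet):
    return sum(m.count * m.sockets * m.cores_per_socket for m in fleet)


def aggregate_clock_ghz(fleet):
    # Sum of clock speed over every core: a headline number, not a benchmark.
    return sum(m.count * m.sockets * m.cores_per_socket * m.clock_ghz
               for m in fleet)


def total_ram_gb(fleet):
    return sum(m.count * m.ram_gb for m in fleet)


print(f"Cores: {total_cores(FLEET)}")
print(f"Aggregate clock: {aggregate_clock_ghz(FLEET) / 1000:.2f} THz")
print(f"RAM: {total_ram_gb(FLEET) / 1000:.1f} TB")
```

Under those assumptions the sums come out at 280 cores, roughly 0.92 THz of aggregate clock, and about 2.3TB of RAM, in line with the rounded figures listed.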

So what will June 23 look like?

Here's our current downtime schedule for when TQ will be offline:

0900: All EVE services go offline (web, forums, test servers, EVE Gate, TQ; basically everything hosted in London).

1200: EVE Online web, secure, and test servers come back online. (All network services are reestablished in London; only TQ should still be down at this time.)

1500: TQ back online
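All times above are UTC. If you want to see the schedule in your own time zone, or how long each phase lasts, a small sketch like the following would do it. The `to_local` helper and the UTC-4 offset are only examples; plug in your own offset.

```python
from datetime import datetime, timedelta, timezone

# Date of the maintenance window, taken from the announcement.
DOWNTIME_DATE = (2010, 6, 23)

SCHEDULE = [
    ("0900", "All EVE services go offline"),
    ("1200", "Web, secure, and test servers back online"),
    ("1500", "TQ back online"),
]


def utc(hhmm: str) -> datetime:
    """Turn an 'HHMM' string from the schedule into a UTC datetime."""
    return datetime(*DOWNTIME_DATE, int(hhmm[:2]), int(hhmm[2:]),
                    tzinfo=timezone.utc)


def to_local(moment: datetime, utc_offset_hours: float) -> datetime:
    """Convert to a fixed-offset local time (example helper, ignores DST rules)."""
    return moment.astimezone(timezone(timedelta(hours=utc_offset_hours)))


total_outage = utc("1500") - utc("0900")    # everything down until TQ returns
tq_only_outage = utc("1500") - utc("1200")  # only TQ still down

for hhmm, event in SCHEDULE:
    local = to_local(utc(hhmm), -4)  # -4 is a hypothetical example offset
    print(f"{hhmm} UTC ({local:%H:%M} local): {event}")

print(f"Total TQ downtime: {total_outage}")
print(f"TQ-only downtime:  {tq_only_outage}")
```

If everything goes to plan, that works out to six hours of TQ downtime, with the last three being TQ only.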